llama.cpp is an open source software library written in C++ that performs inference on various large language models (LLMs), such as Llama. A CLI and a web server are included along with the library. It was developed together with the GGML project, a general-purpose tensor library.

It was developed by Georgi Gerganov, a physics graduate, starting at the end of 2022, and was inspired by LibNC, which was created by Fabrice Bellard, the creator of FFmpeg and QuickJS.

What sets llama.cpp apart is that it has no dependencies and runs on computers without GPUs, and even on smartphones. llama.cpp uses the GGUF file format, a binary format that stores tensors and metadata in a single file.
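To make the GGUF description more concrete, here is a minimal sketch that reads the fixed-size header at the start of a `.gguf` file. It assumes the GGUF v2/v3 layout (a 4-byte `GGUF` magic, then a little-endian uint32 version, a uint64 tensor count and a uint64 metadata key/value count); the helper names `read_le` and `gguf_header` are hypothetical, and only the standard `od` and `head` tools are used.

```bash
#!/usr/bin/env bash
# Sketch: inspect the fixed-size header at the start of a GGUF file.
# Assumed layout (GGUF v2/v3): 4-byte magic "GGUF", uint32 version,
# uint64 tensor count, uint64 metadata key/value count, all little-endian.

# read_le OFFSET NBYTES FILE: print the little-endian unsigned integer stored there.
read_le() {
  local off=$1 n=$2 file=$3 val=0 bits=0 b
  # od prints each byte as an unsigned decimal; -v disables "*" line folding.
  for b in $(od -An -v -t u1 -j "$off" -N "$n" -- "$file"); do
    val=$(( val | (b << bits) ))   # least significant byte comes first
    bits=$(( bits + 8 ))
  done
  echo "$val"
}

# gguf_header FILE: check the magic, then print the version and the two counts.
gguf_header() {
  local file=$1
  if [ "$(head -c 4 -- "$file")" != "GGUF" ]; then
    echo "not a GGUF file: $file" >&2
    return 1
  fi
  echo "version=$(read_le 4 4 "$file") tensors=$(read_le 8 8 "$file") metadata_kv=$(read_le 16 8 "$file")"
}

# Usage: gguf_header qwen1_5-4b-chat-q4_k_m.gguf
```

The tensor descriptions, metadata entries and tensor data follow this header and are not parsed by the sketch.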

Installation

You can use pre-compiled binaries for Windows, macOS, GNU/Linux and FreeBSD.

Just download them from the releases page. Example for Ubuntu (it works on any distro, but you need to have the cURL library installed):

Example for build b3615:

```bash
curl -LO https://github.com/ggerganov/llama.cpp/releases/download/b3615/llama-b3615-bin-ubuntu-x64.zip
unzip llama-b3615-bin-ubuntu-x64.zip
cd build
./llama-cli --help
```

You can also compile from scratch, which is how I did it, because this way the build includes optimizations for your hardware. Just clone the GitHub repository and compile with make:

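```bash
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
make
./llama-cli --help
# Note: newer checkouts no longer build with make; use CMake instead:
#   cmake -B build && cmake --build build --config Release
#   ./build/bin/llama-cli --help
```

Usage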

You will need a model. There are models for several purposes (chat conversations, generating programming code, and so on), and you can search for and download them on Hugging Face. Example download of a chat model, Qwen1.5-4B-Chat-GGUF:

```bash
curl -L -o qwen1_5-4b-chat-q4_k_m.gguf 'https://huggingface.co/Qwen/Qwen1.5-4B-Chat-GGUF/resolve/main/qwen1_5-4b-chat-q4_k_m.gguf?download=true'
```

For more information, see the Files and versions tab at https://huggingface.co/Qwen/Qwen1.5-4B-Chat-GGUF.
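The download URL follows the pattern `https://huggingface.co/<repo>/resolve/<revision>/<file>`, so other GGUF files can be fetched the same way. A small sketch, where `hf_url` and `hf_download` are hypothetical helper names and `main` is assumed as the default revision:

```bash
#!/usr/bin/env bash
# hf_url REPO FILE [REVISION]: build the direct download URL for one file.
hf_url() {
  local repo=$1 file=$2 revision=${3:-main}
  printf 'https://huggingface.co/%s/resolve/%s/%s\n' "$repo" "$revision" "$file"
}

# hf_download REPO FILE: save the file under its own name in the current directory.
hf_download() {
  local repo=$1 file=$2
  # -L follows the redirect to the storage server, -o fixes the local file name,
  # -f makes curl fail on HTTP errors instead of saving an error page.
  curl -fL -o "$file" "$(hf_url "$repo" "$file")"
}

# Usage: hf_download Qwen/Qwen1.5-4B-Chat-GGUF qwen1_5-4b-chat-q4_k_m.gguf
```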

Now just use llama-cli and run it with the following parameters:

In this example, let’s assume that we want the AI to answer the question "What is C++?" (in English):

```bash
./llama-cli -m qwen1_5-4b-chat-q4_k_m.gguf -p "What is C++?" -n 128
```

- `-m` indicates the path of the model;
- `-p` passes the question (the prompt); and
- `-n` specifies the number of tokens the model should generate in response to the input. In this case, the value `128` means the model generates up to 128 tokens.
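If you ask several questions, the command above can be wrapped in a shell function. This is only a convenience sketch: `ask`, `LLAMA_CLI` and `MODEL` are hypothetical names (not llama.cpp settings), and only the `-m`, `-p` and `-n` flags described above are used.

```bash
#!/usr/bin/env bash
# ask QUESTION [N_TOKENS]: run llama-cli on one question.
# LLAMA_CLI and MODEL are hypothetical overrides with the defaults used above.
ask() {
  local question=$1 n_tokens=${2:-128}
  "${LLAMA_CLI:-./llama-cli}" \
    -m "${MODEL:-qwen1_5-4b-chat-q4_k_m.gguf}" \
    -p "$question" \
    -n "$n_tokens"
}

# Usage:
#   ask "What is C++?"        # up to 128 tokens
#   ask "What is C++?" 256    # allow a longer answer
```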

Be patient: depending on your hardware, it may take a while.

Conclusion

I found the project excellent, mainly because you can use it on your own machine at no cost and without the limits of a free account. However, despite all the significant improvements, anyone with only a 2-core CPU will not have a smooth experience, not to mention that this simple question consumed almost 15 GB of my RAM.

That’s because my hardware is really outdated, but it still ran!

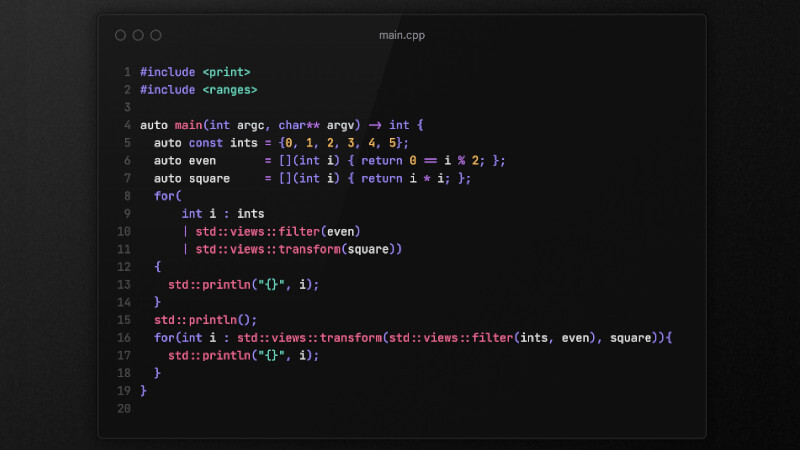

There are several front-ends for llama.cpp, both web-based and desktop, among them:

- Open Playground, whose UI is written in TypeScript, with scripting and the connection to the llama.cpp server handled in Python;
- LM Studio, a desktop application built with Electron.js, available for Windows, macOS and GNU/Linux (via AppImage).

Several others are listed in the llama.cpp [repository itself](https://github.com/ggerganov/llama.cpp), along with plenty of other information you can find there!
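Since a web server is bundled with llama.cpp, the same question can also be sent over HTTP. The sketch below assumes a build that ships the `llama-server` binary listening on port 8080 and its `/completion` endpoint with the `prompt` and `n_predict` fields; `completion_payload` and `ask_server` are hypothetical helpers, and the JSON escaping only covers backslashes and double quotes.

```bash
#!/usr/bin/env bash
# Start the server first, e.g. in another terminal:
#   ./llama-server -m qwen1_5-4b-chat-q4_k_m.gguf --port 8080

# completion_payload PROMPT [N_PREDICT]: build the JSON body for /completion.
completion_payload() {
  local prompt n=${2:-128}
  # Escape backslashes first, then double quotes; newlines are NOT escaped.
  prompt=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"prompt": "%s", "n_predict": %d}\n' "$prompt" "$n"
}

# ask_server PROMPT [N_PREDICT]: POST the prompt; the reply is JSON with a "content" field.
ask_server() {
  curl -s http://localhost:8080/completion \
    -H 'Content-Type: application/json' \
    -d "$(completion_payload "$@")"
}

# Usage: ask_server "What is C++?" 128
```

Keeping the payload builder separate from the `curl` call makes it easy to check the JSON without a running server.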